Preface

This is the opening installment of a series analyzing how Hadoop's NameNode class starts up.

First, let's look at what FSDirectory does.

It all starts with the code below (all according to the choice of Steins;Gate, just kidding).

Main topic

Let's start by looking at NameNode's constructor.

You'll notice that it creates an FSNamesystem object.

Next, let's step into FSNamesystem.

For now, we only need to focus on what the FSDirectory class does.
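The ownership chain described so far (NameNode builds an FSNamesystem, which in turn builds the FSDirectory) can be modeled with three toy classes. This is a simplified, hypothetical model rather than Hadoop source: the class names mirror Hadoop's, but the no-argument constructors and the `LOG` list are assumptions for illustration only. The real constructors take configuration and do considerably more work.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model of the startup chain: NameNode -> FSNamesystem -> FSDirectory.
// These are NOT the Hadoop classes; signatures and fields are hypothetical.
public class StartupChainSketch {
    // Records construction order so the chain can be observed.
    static final List<String> LOG = new ArrayList<>();

    static class FSDirectory {
        FSDirectory() {
            // In this toy model nothing is loaded here; the image handling
            // is what the rest of this article examines.
            LOG.add("FSDirectory created");
        }
    }

    static class FSNamesystem {
        final FSDirectory dir;

        FSNamesystem() {
            LOG.add("FSNamesystem created");
            this.dir = new FSDirectory(); // FSNamesystem owns the FSDirectory
        }
    }

    static class NameNode {
        final FSNamesystem namesystem;

        NameNode() {
            LOG.add("NameNode constructor");
            this.namesystem = new FSNamesystem(); // NameNode owns the FSNamesystem
        }
    }

    public static void main(String[] args) {
        new NameNode();
        System.out.println(LOG);
    }
}
```

Running `main` prints the construction order `[NameNode constructor, FSNamesystem created, FSDirectory created]`, which is the path this article follows down to FSDirectory.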

To make things easier to follow, I extracted the code under study and wrote it as a test class so it can be run on its own.

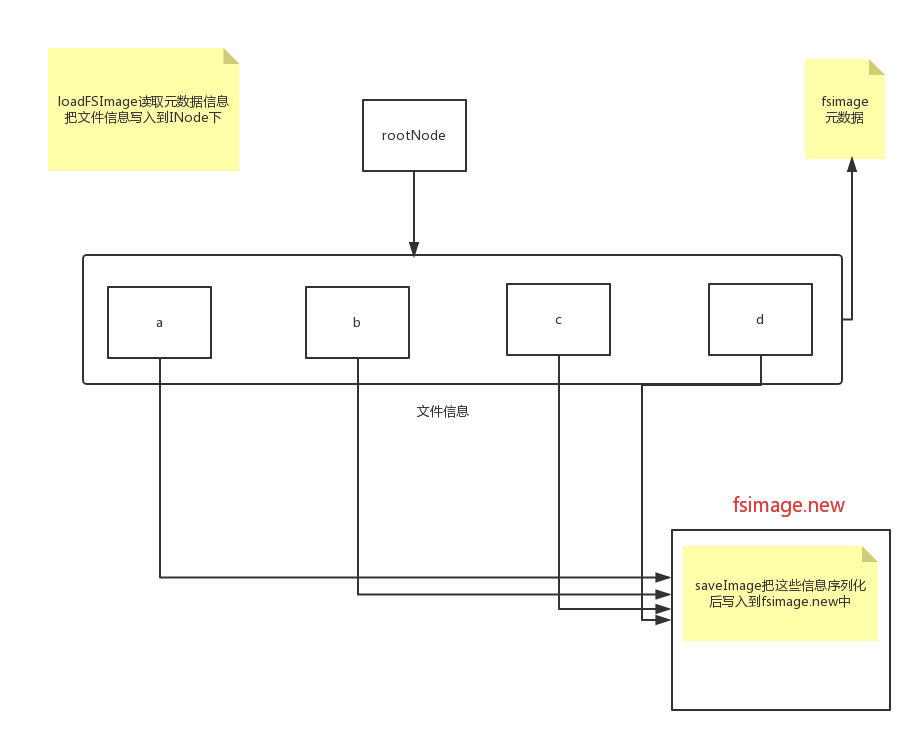

A flowchart?

It isn't really a flowchart; it just presents parts of the code as a picture.

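The image is first written to a .new file, which is then renamed to fsimage, and finally the .old file is deleted. At that point, loading is complete.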
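The write-then-rename sequence above can be sketched with `java.nio.file`. This is a minimal sketch, not the FSDirectory source: the file names `fsimage.new` / `fsimage.old`, the method `saveImage`, and the step that moves the existing fsimage aside as `.old` before promoting `.new` are assumptions inferred from the description (the `.old` file has to come from somewhere).

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

// Hypothetical sketch of the ".new -> fsimage, then delete .old" sequence.
// File and method names are assumptions, not Hadoop's actual code.
public class ImageSaveSketch {
    static final String CUR = "fsimage";
    static final String NEW = "fsimage.new";
    static final String OLD = "fsimage.old";

    static void saveImage(Path dir, byte[] imageBytes) throws IOException {
        Path cur = dir.resolve(CUR);
        Path newFile = dir.resolve(NEW);
        Path old = dir.resolve(OLD);

        // 1. Write the complete image to the .new file; the current fsimage is untouched.
        Files.write(newFile, imageBytes);

        // 2. Move the current image aside as .old (assumed step), replacing any stale .old.
        if (Files.exists(cur)) {
            Files.move(cur, old, StandardCopyOption.REPLACE_EXISTING);
        }

        // 3. Promote .new to be the current fsimage.
        Files.move(newFile, cur);

        // 4. The previous image is no longer needed.
        Files.deleteIfExists(old);
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("image");
        Files.write(dir.resolve(CUR), "v1".getBytes(StandardCharsets.UTF_8));
        saveImage(dir, "v2".getBytes(StandardCharsets.UTF_8));
        System.out.println(new String(Files.readAllBytes(dir.resolve(CUR)), StandardCharsets.UTF_8));
        System.out.println(Files.exists(dir.resolve(NEW)) + " " + Files.exists(dir.resolve(OLD)));
    }
}
```

Running `main` prints `v2` followed by `false false`: the new content is now in fsimage and neither temporary file remains. Writing the full image to a separate file before touching fsimage means a crash during the write leaves the previous image intact, and because each step is a single rename or delete, an interrupted save leaves a recognizable combination of files behind. Note that `Files.move` without `StandardCopyOption.ATOMIC_MOVE` is not guaranteed to be atomic on every file system.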